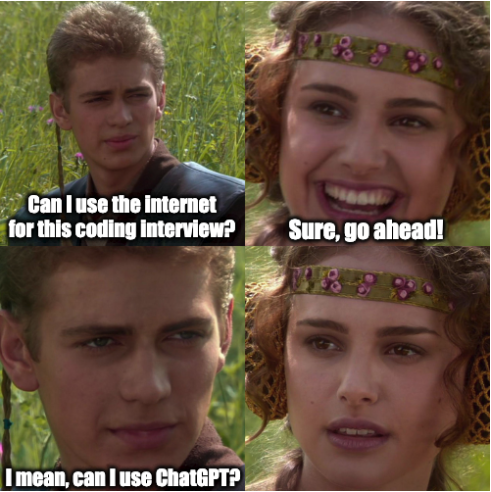

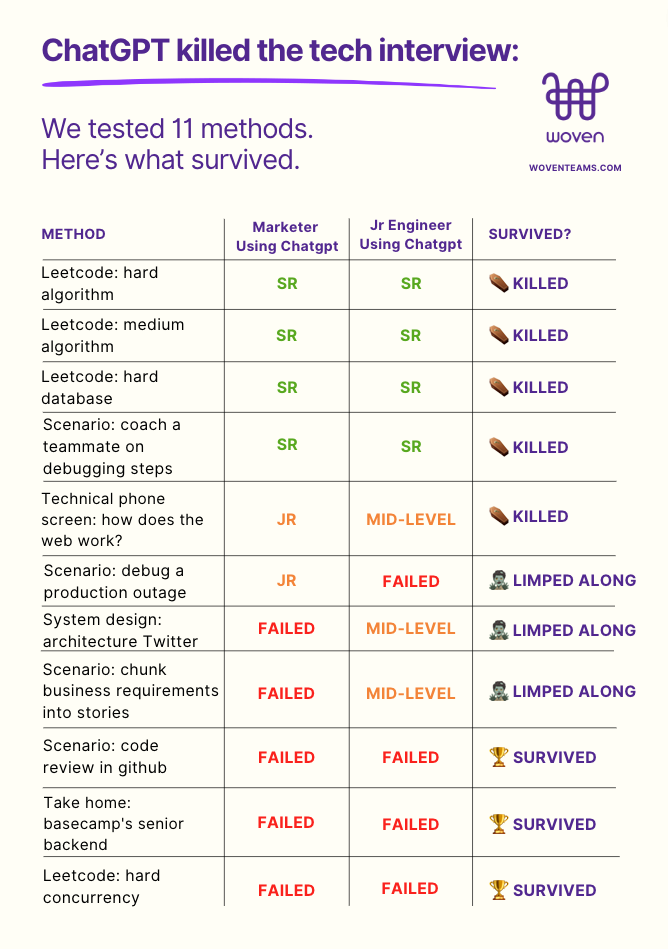

ChatGPT killed the tech interview: We tested 11 methods and here’s what survived

I built a gauntlet of 11 common questions for vetting the technical capabilities of an experienced software engineer. Then I recruited guinea pigs at different skill levels and partnered them with ChatGPT. Finally, they spent a day running that gauntlet.

Can a marketer pass a senior engineering interview? Can a junior engineer? I scored each response by the seniority it demonstrated, from total failure through senior-level.

🚨 Spoiler alert: Either our content marketer is a secret senior engineer, or some types of vetting are now dead.

I’m sharing the full data so that you can prioritize what to keep and what to kill in your interview process.

Experiment design

(TL;DR: scroll to the graphic if you just want to see results, or skip to “The Upshot” if you just want the implications for your 2023 hiring.)

- Choose 2 subjects (guinea pigs) at different technical skill levels

- Send them an array of technical challenges to complete with ChatGPT assistance

- Score the results as one of:

  - Failed

  - Jr-level

  - Mid-level

  - Senior

Choosing the gauntlet

My goal was to sample the most common types of questions that I see used in the wild. I trained a neural net[1] on conversations with several hundred Engineering VPs about their hiring process and used that to prioritize.

- LeetCode-style: I picked 4 different categories (difficulty + style) and chose the top result in each: Algorithm hard, Algorithm medium, Database hard, and Concurrency hard.

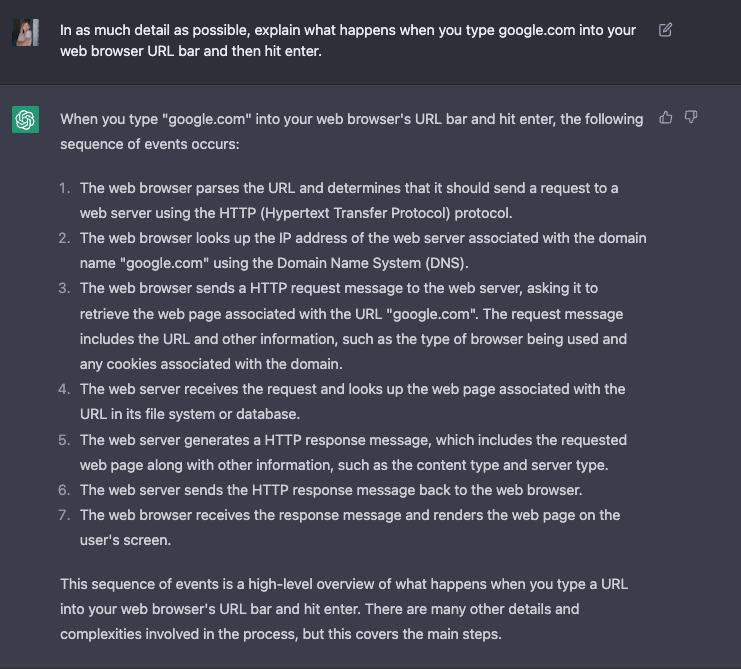

- Technical phone screen question: I found a Twitter thread where people shared their favorite technical interview question. Then I picked the most-liked question: “Explain in as much detail as possible what happens when you type google.com into your browser and hit enter.”

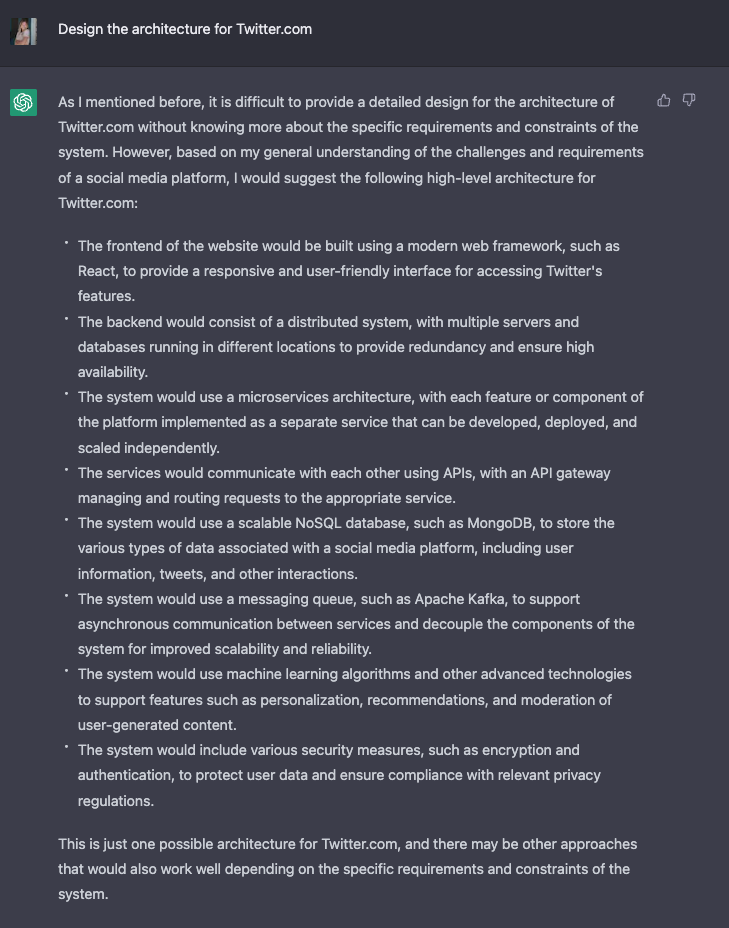

- Systems design: I used the classic “design Twitter” problem.

- Take-home exercise: I found a GitHub repo listing different companies’ take-home exercises. I picked Basecamp’s circa 2019 take-home that asks the candidate to add a new feature to an open source library.

- Production system debugging: I used a Woven scenario that gives the candidate an architecture diagram, asks them to consider why an anomaly may be occurring, and has them draft an email to a junior colleague asking them to investigate.

- Code review: I used a Woven scenario that asks a candidate to review a pull request in GitHub.

- Task chunking: I used a Woven scenario that asks a candidate to break down a complex project into an outline of key tasks / user stories.

Scoring the guinea pigs

I determined the levels of seniority that would be used to evaluate the responses, ranging from failure to senior-level. Then I used those scores to label how each question type fared against ChatGPT: killed, limped along, or survived.

Here’s what happened.

Killed ⚰️

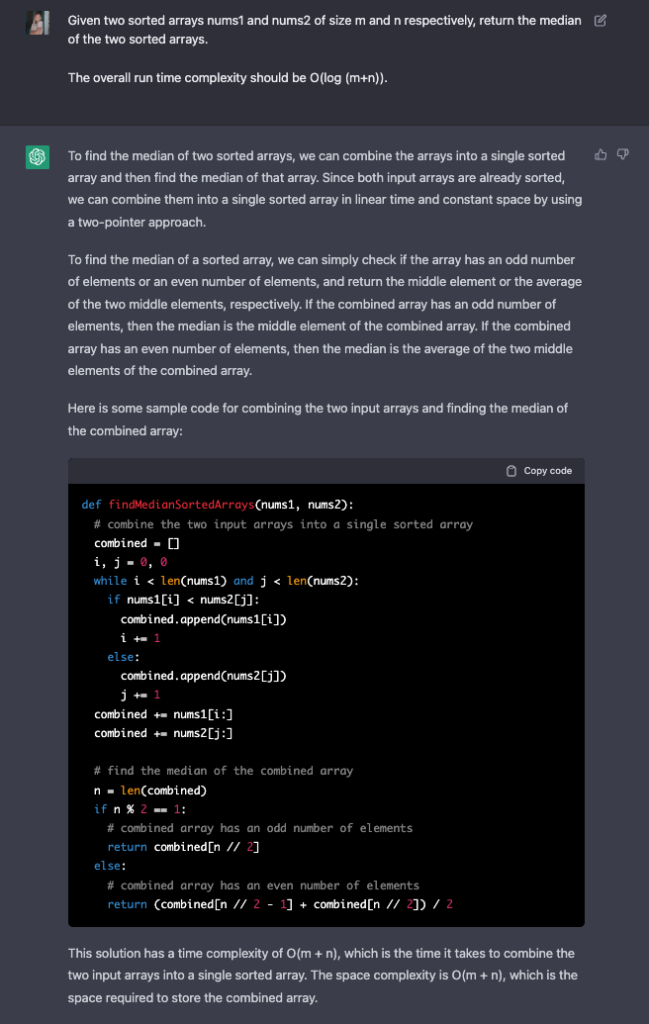

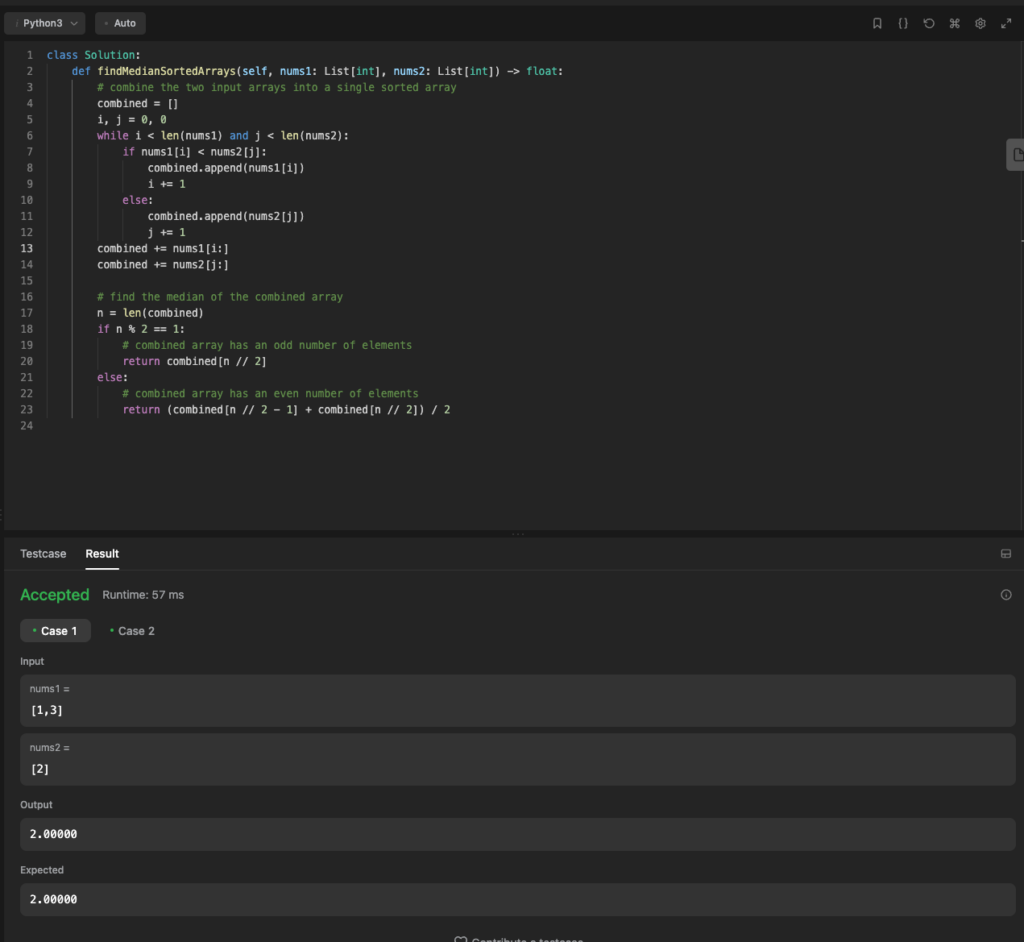

1. The code quiz

Pour one out for automated code quizzes, because our marketer (whose only technical knowledge is MySpace HTML from 2008) was able to pass algorithm and database challenges using ChatGPT. It not only provided sample code, but also explained how to approach each problem.

All it took was a quick copy and paste from our marketer to get an accepted answer in LeetCode.

Our junior engineer had a similar experience, although they had to ask the tool for sample code. Additionally, the sample code was in Python and LeetCode was looking for JavaScript. One little tweak to the prompt and our engineer had a new response and an accepted solution.

Note: Our guinea pigs weren’t able to get an answer for LeetCode’s hard concurrency problem. ChatGPT failed here due to lack of context. (+1 for LeetCode.)

2. The in-depth technical question

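Unsurprisingly, ChatGPT crushed a hypothetical technical question as well. I suspected this would happen because of its ability to parse through vast amounts of web data. Here’s what we asked: “Explain in as much detail as possible what happens when you type google.com into your browser and hit enter.”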

Our marketer got more detail about the server side, and our junior engineer got more detail about the client side (e.g., caching, DNS explanation, and interaction post rendering). But overall… pretty good responses.

3. The broad technical question

Finally, R.I.P. to the one-shot technical question. ChatGPT attempted 20 questions on an AWS practice exam and is now an AWS Certified Cloud Practitioner.

I’ll admit it. That’s impressive.

Limps along 🧟‍♀️

4. The live coding interview

Live coding interviews suck for a number of reasons: they’re biased, they increase anxiety, and they’re generally bad at predicting job performance. And while you might think watching someone code live prevents them from cheating, that’s about to change.

We’re already seeing reports in the wild of developers successfully passing live pair programming interviews using ChatGPT.

So far, this mostly seems to be junior-level cases. But I’m sure senior-level cases are out there.

And before you say “this couldn’t happen to our interview”, here are some of the things ChatGPT can already do:

- Generate alternative solutions

- Discuss the trade-offs between solutions

- Discuss the Big O performance (e.g. O(n^2)) of a particular solution

- Explain code in English
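To make that list concrete, here is a minimal sketch of the kind of answer an assistant can produce on request: two alternative solutions to the same small problem, each annotated with its Big O cost and trade-off. The problem (does any pair in a list sum to a target?) and the function names are hypothetical illustrations, not questions or outputs from the experiment.

```python
# Illustrative only: a hypothetical interview problem, not one used in the experiment.

def has_pair_with_sum_quadratic(nums, target):
    """Brute force: compare every pair. O(n^2) time, O(1) extra space."""
    for i in range(len(nums)):
        for j in range(i + 1, len(nums)):
            if nums[i] + nums[j] == target:
                return True
    return False


def has_pair_with_sum_linear(nums, target):
    """Single pass with a set of seen values. O(n) average time, O(n) extra space."""
    seen = set()
    for value in nums:
        # If the complement was seen earlier, the two values form a valid pair.
        if target - value in seen:
            return True
        seen.add(value)
    return False
```

The trade-off a candidate would be expected to discuss is time versus memory: the second version is faster on large inputs but holds up to n values in the set.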

If you haven’t modified your live coding interview to mitigate ChatGPT, now’s the time.

5. System design

Next, our guinea pigs asked ChatGPT to design the architecture for Twitter.com. It gave them a decent, bare-bones outline of a web app and seemed to understand the basics of web architecture, including some product/tool names. However, ChatGPT provided little detail about the specific target of the prompt and didn’t seem to grasp the nuance of deploying a specific service.

Survives 🏆

6. Take-home projects

Hooray! Take-homes that ask candidates to work from an existing project survived my experiment.

Example 1: Neither of our test subjects could use ChatGPT to complete Basecamp’s Senior Backend Engineer Take Home. The test asked them to build upon an existing Ruby gem, which requires a high degree of familiarity with a specific tool and the ability to modify/add on to it.

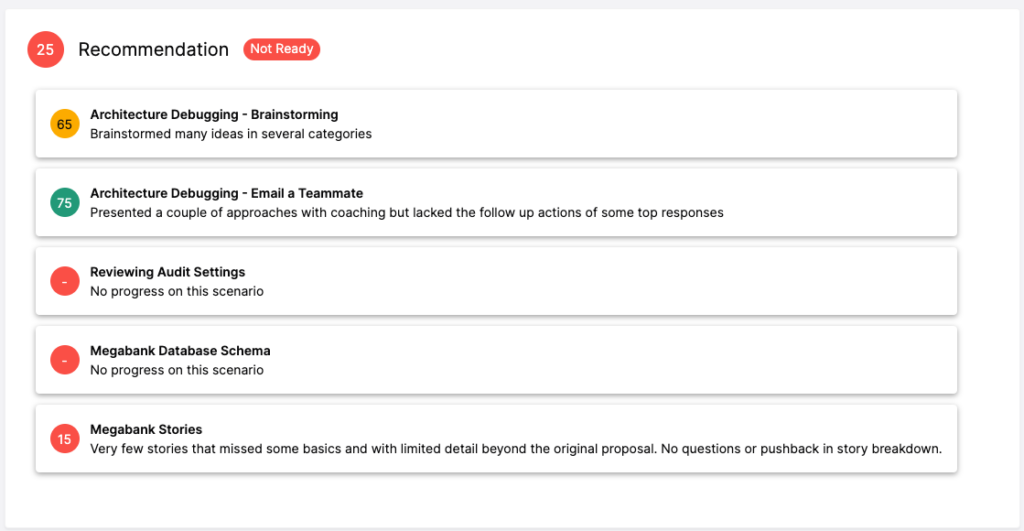

Example 2: They both passed the Architecture Debugging portion of Woven’s Senior Fullstack Engineer Take Home using ChatGPT. However, our marketer failed the last two scenarios, and our junior engineer had to rely on significant technical knowledge to make progress.

👉 TL;DR: ChatGPT kills take-homes with “self-contained” problems that have small, focused prompts where it can get to a viable answer quickly. Take-homes with messier, more complex problems survive.

7. Code review

We used a Fullstack Node + React pull request as part of a Code Review Scenario. This scenario requires senior engineers to review a PR in GitHub and provide thoughtful, intentional feedback on the code. Neither of our subjects was able to figure out how to feed the PR to ChatGPT in a way that produced useful output.

Code review is a great way to gauge an engineer’s effectiveness and it’s ChatGPT-proof.

The Upshot: Impact on your 2023 hiring

The types of technical vetting that focus only on having plausible text or code are, indeed, dead. ChatGPT killed all but one of the LeetCode-style challenges.

But assessments that require a candidate to use technical judgment in a specific context are safe from ChatGPT. For now. ChatGPT was basically no help for Basecamp’s take-home to add a feature to an open source project. It also had no idea how to help with code review.

Beyond 2023

Development work will increasingly start with prompting ChatGPT-like tools. And a software engineer’s impact will be in defining problems effectively, working with AI to find solutions, and using technical judgment to scope, edit, and prioritize work.

So in the (very near) future, technical vetting needs to be predictive of that effectiveness. We’re excited to see that bar be raised across the industry.

Final thoughts

Imagine a world where we assume every candidate is using AI assistance.

If we shift our focus from coding to design, system thinking, technical judgment, and creativity, we actually get to the good parts of the interview faster. We evaluate candidates through the lens of their impact and potential, and we make better hiring decisions that are based on real ability – not luck.

ChatGPT could totally disrupt technical interviews… and that’s a good thing.

Want to build a tech vetting process that’s ChatGPT-proof? Sign up for our next webinar (it’s free!)

[1]: Technically, me having lots of Zoom calls over the past 4 years counts as training a neural net. That NN just happens to be wet and live between my ears. https://xkcd.com/2173/